LLM vs AI Search: Understanding Response Generation

Exploring how AI search engines combine LLM capabilities with web search for enhanced responses

Understanding AI-Driven Search

Leading companies define AI-driven search in different ways:

- Google: "Unlock entirely new types of questions you never thought Search could answer, and transform the way information is organized."

- ChatGPT Search: "Get fast, timely answers with links to relevant web sources."

- Perplexity AI: "Ask any question, and it searches the internet to give you an accessible, conversational, and verifiable answer."

AI search engines use Large Language Models (LLMs) as intelligent assistants that summarize and synthesize information from multiple sources. How they generate a response varies, however: some answers come from the model alone, while others are grounded in live web results.

The AI Search Process

Query Processing

- User enters initial query

- LLM preprocesses and potentially decomposes the query

- Search is performed against a web index (Google, Bing, or a proprietary one)

- LLM analyzes and summarizes results

- Optional follow-up questions generated

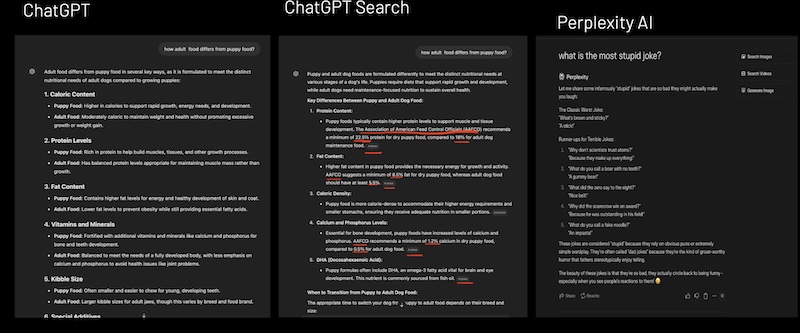
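The steps above can be sketched as a small pipeline. This is a minimal illustration, not how any particular engine is implemented: `decompose_query`, `answer_query`, `fake_search`, and `fake_summarize` are hypothetical names, the decomposition is a naive string split standing in for an LLM call, and the search and summarization steps are passed in as plain functions so the sketch runs without any external service.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class SearchResult:
    url: str
    snippet: str


def decompose_query(query: str) -> List[str]:
    """Stand-in for LLM preprocessing: split a compound query on ' and '."""
    parts = [p.strip() for p in query.split(" and ") if p.strip()]
    return parts or [query]


def answer_query(
    query: str,
    search: Callable[[str], List[SearchResult]],
    summarize: Callable[[str, List[SearchResult]], str],
) -> Dict[str, object]:
    """Run the steps in order: decompose, search, summarize, suggest follow-ups."""
    sub_queries = decompose_query(query)

    # Search the (assumed) web index once per sub-query and pool the results.
    results: List[SearchResult] = []
    for sub in sub_queries:
        results.extend(search(sub))

    # In a real system this would be an LLM call; here it is injected.
    summary = summarize(query, results)

    # Optional follow-up questions, one per sub-query in this sketch.
    follow_ups = [f"Tell me more about {sub}" for sub in sub_queries]

    return {
        "answer": summary,
        "sources": [r.url for r in results],
        "follow_ups": follow_ups,
    }


# Hypothetical stand-ins so the sketch runs offline.
def fake_search(q: str) -> List[SearchResult]:
    slug = q.replace(" ", "-")
    return [SearchResult(url=f"https://example.com/{slug}", snippet=f"About {q}")]


def fake_summarize(query: str, results: List[SearchResult]) -> str:
    return " ".join(r.snippet for r in results)


if __name__ == "__main__":
    print(answer_query("solar panels and heat pumps", fake_search, fake_summarize))
```

Passing `search` and `summarize` in as arguments keeps the control flow visible and lets either step be swapped for a real index or model client.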

Types of AI Search Responses

Pure LLM Responses

In some cases, AI search engines answer from the LLM alone, without performing a web search. Typical cases include:

- General knowledge questions

- Basic explanations

- Common calculations

Web-Enhanced Responses

For queries requiring current or specific information, the system performs a web search and combines the LLM's summarization with:

- Real-time web data

- Multiple authoritative sources

- Specific statistics and quotes
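The split between the two response types can be pictured as a routing decision made before any search runs. The sketch below is purely illustrative: `needs_web_search` and the `FRESHNESS_HINTS` keyword list are assumptions made for this example, and real engines presumably use far richer signals (possibly the LLM itself) to decide.

```python
import re

# Hypothetical keywords suggesting the answer depends on current information.
FRESHNESS_HINTS = ("today", "latest", "current", "news", "price", "this week")

# Matches queries made only of digits, whitespace, and arithmetic symbols.
ARITHMETIC = re.compile(r"[\d\s.+\-*/()]+")


def needs_web_search(query: str) -> bool:
    """Return True if the query looks like it needs fresh web data."""
    q = query.lower().strip()

    # Common calculations can be answered without a search.
    if ARITHMETIC.fullmatch(q):
        return False

    # Route to web search when any freshness hint appears as a whole word.
    return any(re.search(rf"\b{re.escape(hint)}\b", q) for hint in FRESHNESS_HINTS)


if __name__ == "__main__":
    for q in ("2 + 2 * 10", "What is photosynthesis?", "latest iPhone price"):
        print(q, "->", "web-enhanced" if needs_web_search(q) else "pure LLM")
```

A keyword list like this misroutes easily (for example, "electric current" would trigger a search), which is one reason to treat it only as a picture of the decision, not a recommended design.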

SEO Implications

The rise of AI search engines suggests several key impacts on SEO strategy:

Content Optimization

- Focus on comprehensive, authoritative content

- Implement structured data markup

- Optimize for natural language queries

- Include specific data points and statistics
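As one concrete reading of "structured data markup", the sketch below builds a schema.org `Article` description as JSON-LD and wraps it in a script tag for the page head. The helper name `article_jsonld` and all field values in the usage example are placeholders invented for illustration; which properties matter for a given page depends on the content type.

```python
import json


def article_jsonld(headline: str, author: str, date_published: str, description: str) -> str:
    """Return a JSON-LD <script> tag describing an article with schema.org terms."""
    data = {
        "@context": "https://schema.org",
        "@type": "Article",
        "headline": headline,
        "author": {"@type": "Person", "name": author},
        "datePublished": date_published,  # ISO 8601 date string
        "description": description,
    }
    body = json.dumps(data, indent=2)
    return '<script type="application/ld+json">\n' + body + "\n</script>"


if __name__ == "__main__":
    # Placeholder values, not taken from any real page.
    print(
        article_jsonld(
            headline="LLM vs AI Search: Understanding Response Generation",
            author="Jane Doe",
            date_published="2025-01-01",
            description="How AI search engines combine LLMs with web search.",
        )
    )
```

Generating the markup from the same data that renders the page helps keep the structured description and the visible content in sync.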

Series Navigation

Previous Article: AI vs Traditional Search: Source Comparison

Next Article: Where Do LLMs Learn From: Training Data Analysis

Part of "The Future of SEO in the Age of AI-Driven Search" series.