Technical SEO for AI Search Engines: Implementation Guide

Comprehensive guide to implementing technical SEO for AI search optimization

Understanding Technical SEO for AI Search

The technical foundation of your website plays a crucial role in how AI search engines understand, index, and rank your content. Let's explore the key technical aspects that require attention in the age of AI-driven search.

The Role of robots.txt in AI Search

If you want your website to be indexed by AI, make sure that your /robots.txt allows crawlers to index it. You can also restrict specific AI bots from reading and indexing your website. For example, the Wall Street Journal's robots.txt restricts many AI bots from reading the site:

User-agent: CCBot

Disallow: /

User-agent: anthropic-ai

Disallow: /

User-agent: cohere-ai

Disallow: /

Important Note #1: While robots.txt provides guidelines, compliance is voluntary! Some AI bots may disregard these directives.

Important Note #2: If you want to ensure your website's content is not visible to AI, implement password protection or restricted access, so that users must log in or enter a password to read the website or documents.
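To check how a set of rules treats a particular crawler, Python's standard `urllib.robotparser` module can evaluate them locally. The sketch below is a minimal example, assuming the three rules quoted above; `example.com` and `ExampleBot` are placeholder names used only for illustration.

```python
from urllib.robotparser import RobotFileParser

# The three rules quoted above; a real robots.txt would usually contain more.
ROBOTS_TXT = """\
User-agent: CCBot
Disallow: /

User-agent: anthropic-ai
Disallow: /

User-agent: cohere-ai
Disallow: /
"""


def is_allowed(user_agent: str, url: str, robots_txt: str = ROBOTS_TXT) -> bool:
    """Return True if the rules permit `user_agent` to fetch `url`."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


if __name__ == "__main__":
    # "ExampleBot" is a hypothetical crawler with no matching rule.
    for bot in ("CCBot", "anthropic-ai", "cohere-ai", "ExampleBot"):
        print(bot, is_allowed(bot, "https://example.com/article"))
```

This only reports what the file asks crawlers to do; it cannot tell you whether a given bot actually honors the request.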

Server-Side Rendering: A Critical Component

Research Findings

- JavaScript-heavy pages: ~2 weeks for re-indexing

- Static pages: ~2 days for re-indexing

Benefits

- Faster indexing by search engines

- Reduced resource requirements

- More reliable content parsing

- Improved AI accessibility
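One rough way to evaluate your current rendering approach is to check whether key content is already present in the raw HTML, before any JavaScript runs. The following is a minimal sketch using only Python's standard library; the function names and the sample markup are assumptions made for illustration, and in practice you would feed it the HTML your server actually returns.

```python
from html.parser import HTMLParser


class _TextExtractor(HTMLParser):
    """Collects text outside <script> and <style> elements."""

    def __init__(self):
        super().__init__()
        self._skip_depth = 0
        self.chunks = []

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip_depth:
            self._skip_depth -= 1

    def handle_data(self, data):
        if not self._skip_depth and data.strip():
            self.chunks.append(data.strip())


def raw_text(html: str) -> str:
    """Return the text a crawler sees without executing JavaScript."""
    parser = _TextExtractor()
    parser.feed(html)
    parser.close()
    return " ".join(parser.chunks)


def is_in_raw_html(html: str, phrase: str) -> bool:
    """True if `phrase` appears in the server-delivered markup."""
    return phrase.lower() in raw_text(html).lower()


# Hypothetical examples: a server-rendered page and a client-side shell.
SSR_PAGE = "<html><body><h1>Pricing plans</h1><p>From $10.</p></body></html>"
CSR_SHELL = '<html><body><div id="root"></div><script src="/app.js"></script></body></html>'

if __name__ == "__main__":
    print(is_in_raw_html(SSR_PAGE, "Pricing plans"))
    print(is_in_raw_html(CSR_SHELL, "Pricing plans"))
```

If an important phrase is missing from the raw HTML, a crawler that does not execute JavaScript will not see it either.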

Structured Data Implementation

Common JSON-LD Structures

- "WebPage" for general pages

- "Article" for news and blog posts

- "HowTo" for instructional content

- "QAPage" and "FAQPage" for Q&A

- "Event" for time-based content

- "Product" for e-commerce
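As an illustration of one of these types, the sketch below builds an "Article" object and wraps it in the script tag that carries JSON-LD in a page. It is a minimal example, not a complete markup strategy: the function names and all sample values (headline, author, URL) are made up, and only a few common schema.org properties are shown.

```python
import json


def article_jsonld(headline: str, author: str, date_published: str, url: str) -> dict:
    """Build a small schema.org Article object as a plain dict."""
    return {
        "@context": "https://schema.org",
        "@type": "Article",
        "headline": headline,
        "author": {"@type": "Person", "name": author},
        "datePublished": date_published,  # ISO 8601 date
        "url": url,
    }


def to_script_tag(data: dict) -> str:
    """Serialize the object into a JSON-LD <script> element."""
    body = json.dumps(data, indent=2)
    # Escape "</" so the JSON cannot close the script element early.
    body = body.replace("</", "<\\/")
    return f'<script type="application/ld+json">\n{body}\n</script>'


if __name__ == "__main__":
    # Hypothetical sample values.
    article = article_jsonld(
        headline="Technical SEO for AI Search Engines",
        author="Jane Doe",
        date_published="2025-01-15",
        url="https://example.com/technical-seo-ai",
    )
    print(to_script_tag(article))
```

The resulting element is typically placed in the page's head, and it should be part of the server-delivered HTML so crawlers can read it without running JavaScript.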

Implementation Benefits

- Clear content context for AI systems

- Better content classification

- Enhanced rich result generation

- Improved indexing speed

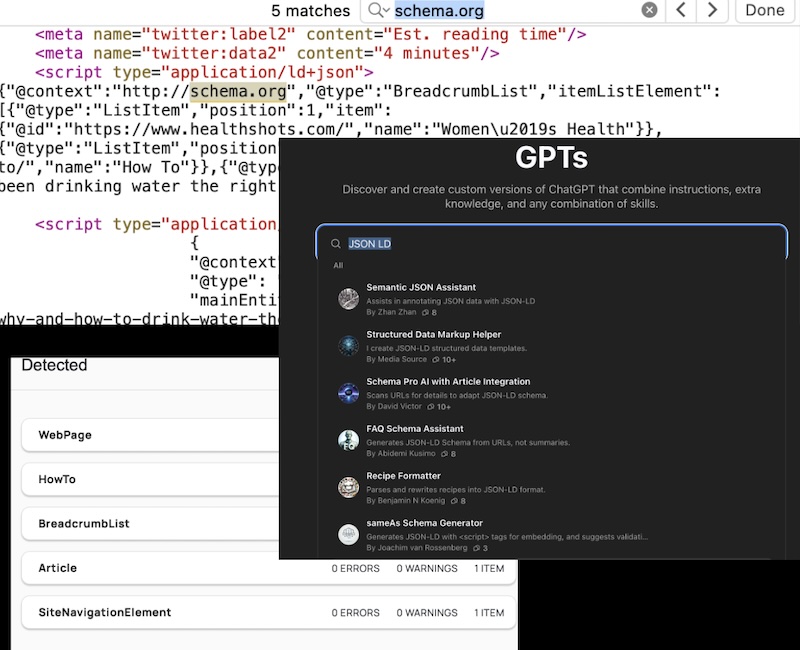

JSON-LD Tools

Finding & Creating

- Search for "schema.org" in page source

- Use CMS plugins

- Generate with AI tools
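Searching the page source by hand can also be automated. The sketch below pulls every JSON-LD block out of an HTML string and checks that it parses as JSON; the class and function names and the sample page are assumptions for illustration. It confirms only that the JSON is well formed, not that it conforms to schema.org.

```python
import json
from html.parser import HTMLParser


class JsonLdFinder(HTMLParser):
    """Collects the parsed contents of application/ld+json script elements."""

    def __init__(self):
        super().__init__()
        self._in_jsonld = False
        self._buffer = []
        self.items = []   # successfully parsed JSON-LD objects
        self.errors = 0   # blocks that were not valid JSON

    def handle_starttag(self, tag, attrs):
        if tag == "script" and dict(attrs).get("type") == "application/ld+json":
            self._in_jsonld = True
            self._buffer = []

    def handle_data(self, data):
        if self._in_jsonld:
            self._buffer.append(data)

    def handle_endtag(self, tag):
        if tag == "script" and self._in_jsonld:
            self._in_jsonld = False
            try:
                self.items.append(json.loads("".join(self._buffer)))
            except json.JSONDecodeError:
                self.errors += 1


def find_jsonld(html: str) -> list:
    """Return all well-formed JSON-LD objects found in `html`."""
    finder = JsonLdFinder()
    finder.feed(html)
    finder.close()
    return finder.items


# Hypothetical sample page with one JSON-LD block.
SAMPLE_HTML = """<html><head>
<script type="application/ld+json">
{"@context": "https://schema.org", "@type": "FAQPage"}
</script>
<script>console.log("not structured data")</script>
</head><body></body></html>"""

if __name__ == "__main__":
    for item in find_jsonld(SAMPLE_HTML):
        print(item.get("@type"))
```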

Validation Tools

There are also tools, such as custom GPTs for OpenAI's ChatGPT, that can help you quickly generate a JSON-LD structure for your web pages.

Real-Time Updates with IndexNow

The IndexNow protocol represents a proactive approach to content indexing. While currently supported by Bing (and by extension, ChatGPT), it's not yet implemented by Google or Perplexity AI.

Competitive Advantages

- Enables faster content indexing

- Reduces crawl burden on servers

- Improves content freshness signals

- Facilitates rapid content updates
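An IndexNow notification is a small HTTP request listing the URLs that changed. The sketch below builds such a request with Python's standard library without sending it. It is a minimal example under stated assumptions: the host, key, and URLs are placeholders, the function name is hypothetical, and the shared `api.indexnow.org` endpoint is used, though participating search engines also expose their own.

```python
import json
import urllib.request

# Shared IndexNow endpoint; individual search engines also accept submissions.
INDEXNOW_ENDPOINT = "https://api.indexnow.org/indexnow"


def build_indexnow_request(host: str, key: str, urls: list) -> urllib.request.Request:
    """Build (but do not send) a POST request announcing changed URLs."""
    payload = {
        "host": host,
        "key": key,
        # Assumes the key file is hosted at the site root as <key>.txt.
        "keyLocation": f"https://{host}/{key}.txt",
        "urlList": list(urls),
    }
    body = json.dumps(payload).encode("utf-8")
    return urllib.request.Request(
        INDEXNOW_ENDPOINT,
        data=body,
        headers={"Content-Type": "application/json; charset=utf-8"},
        method="POST",
    )


if __name__ == "__main__":
    # Placeholder host, key, and URLs for illustration only.
    request = build_indexnow_request(
        host="www.example.com",
        key="0123456789abcdef0123456789abcdef",
        urls=["https://www.example.com/new-post"],
    )
    print(request.get_method(), request.full_url)
    print(request.data.decode("utf-8"))
```

Passing the request to `urllib.request.urlopen` would submit it; that call is left out here so the example has no network side effects.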

Technical Optimization Checklist

1 Server-side Rendering Implementation

- Evaluate current rendering approach

- Measure indexing speed

- Implement SSR where appropriate

- Monitor performance impact

2 Structured Data Integration

- Choose appropriate schema types

- Implement JSON-LD

- Validate implementation

- Monitor rich result performance

3 IndexNow Protocol Adoption

- Set up API key

- Implement automatic notifications

- Monitor indexing performance

- Track content freshness
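For the API key step, IndexNow expects a key of 8 to 128 characters (letters, digits, and dashes) that is also published in a text file on your site, which proves you control the host. The sketch below generates such a key and works out where the file would live. The function names and `www.example.com` are hypothetical, and hosting the file at the site root is one option assumed here.

```python
import re
import secrets

# Allowed key format: 8-128 characters drawn from a-z, A-Z, 0-9, and dashes.
KEY_PATTERN = re.compile(r"^[A-Za-z0-9-]{8,128}$")


def new_indexnow_key() -> str:
    """Generate a random 32-character hexadecimal key."""
    return secrets.token_hex(16)


def key_file(host: str, key: str) -> tuple:
    """Return (url, contents) for the key file, assuming root hosting."""
    if not KEY_PATTERN.match(key):
        raise ValueError("key must be 8-128 characters: a-z, A-Z, 0-9, or '-'")
    return f"https://{host}/{key}.txt", key


if __name__ == "__main__":
    key = new_indexnow_key()
    url, contents = key_file("www.example.com", key)  # placeholder host
    print("Publish a file at:", url)
    print("With contents:", contents)
```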

Future-Proofing Technical Implementation

As AI search continues to evolve, keep these forward-looking technical considerations in mind:

Infrastructure

- Implement robust content delivery systems

- Prepare for increased structured data requirements

Standards & Monitoring

- Monitor emerging technical standards

- Maintain flexible, adaptable implementations

Pro Tip: Implementation Strategy

Start with the most impactful changes. Focus on server-side rendering and structured data implementation before moving on to more advanced optimizations such as adopting the IndexNow protocol.

Key Takeaways

- Prioritize server-side rendering for faster indexing

- Implement comprehensive structured data with JSON-LD

- Consider IndexNow for faster content discovery

- Maintain flexibility for future AI search developments